Celluster™ Reflex Compute

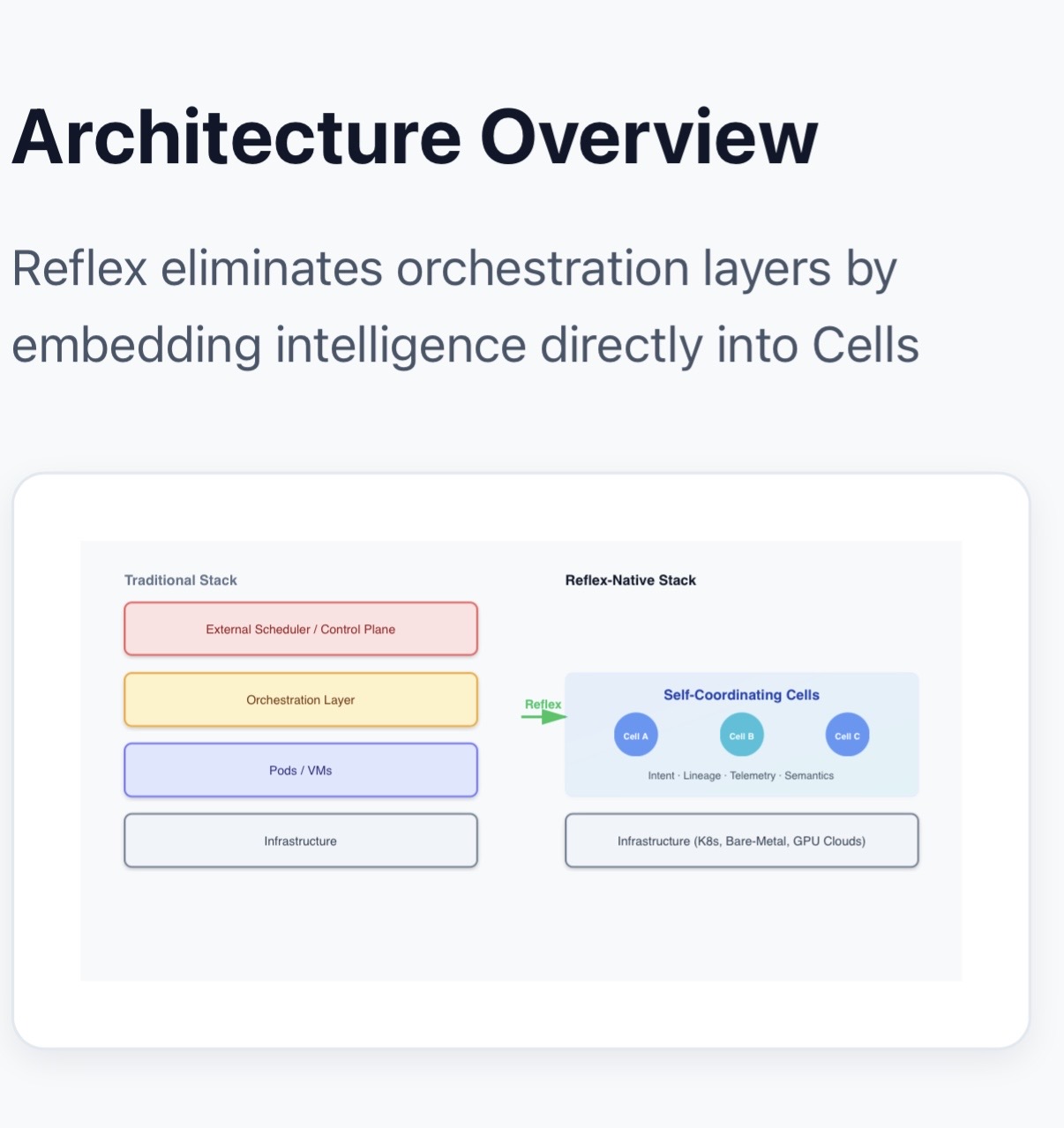

Beyond orchestration: a semantic substrate for any compute fabric.

Celluster is building Reflex-Native Infrastructure: intent-driven execution without orchestration. Workloads launch, upgrade, migrate, and adapt in real time, driven by intent and telemetry across CPU, GPU, and cluster fabrics, without control planes or schedulers.

From clusters to data centers — Reflex turns infrastructure into a living system.

The Reflex-Native Model

Elastic by awareness, not virtualization.

Reflex introduces Cells — self-aware units of identity, continuity, and intent — forming a living compute fabric. Instead of pods and VMs driven by external schedulers, Cells coordinate themselves through semantics, lineage, and telemetry.

- Instant launch — activation by intent, not choreographed YAML pipelines.

- Seamless upgrade — lineage-aware transitions with zero-downtime semantics.

- Frictionless migration — Cells move across zones and nodes without redeploys.

- Deep debuggability — full lineage and reflex replay across every Cell.

- Secure substrate — isolation and reachability enforced through meaning, not middleware.

- High feature velocity — infrastructure behaves like a live reflex graph, not a static spec.

Learn the primitives: Reflex Terminology.

Reflex vs Traditional Infrastructure

| Dimension | Reflex-Native Infrastructure |

|---|---|

| Scaling Trigger | Semantic intent + live telemetry — infra scales by awareness, not exhaustion thresholds. |

| Coordination Model | Distributed, semantic coordination — no monolithic scheduler, less control-plane churn. |

| State & Isolation | Lineage-scoped state — deterministic reuse and isolation without noisy neighbors. |

| Feedback Loop | Telemetry as input, not noise — reflex emitters directly drive placement and lifecycle. |

| Policy & Placement | Policies as semantics — intent-aware placement replaces brittle config & queue logic. |

| Debug / Replay | End-to-end lineage — replay behavior across Cells instead of hunting logs & pods. |

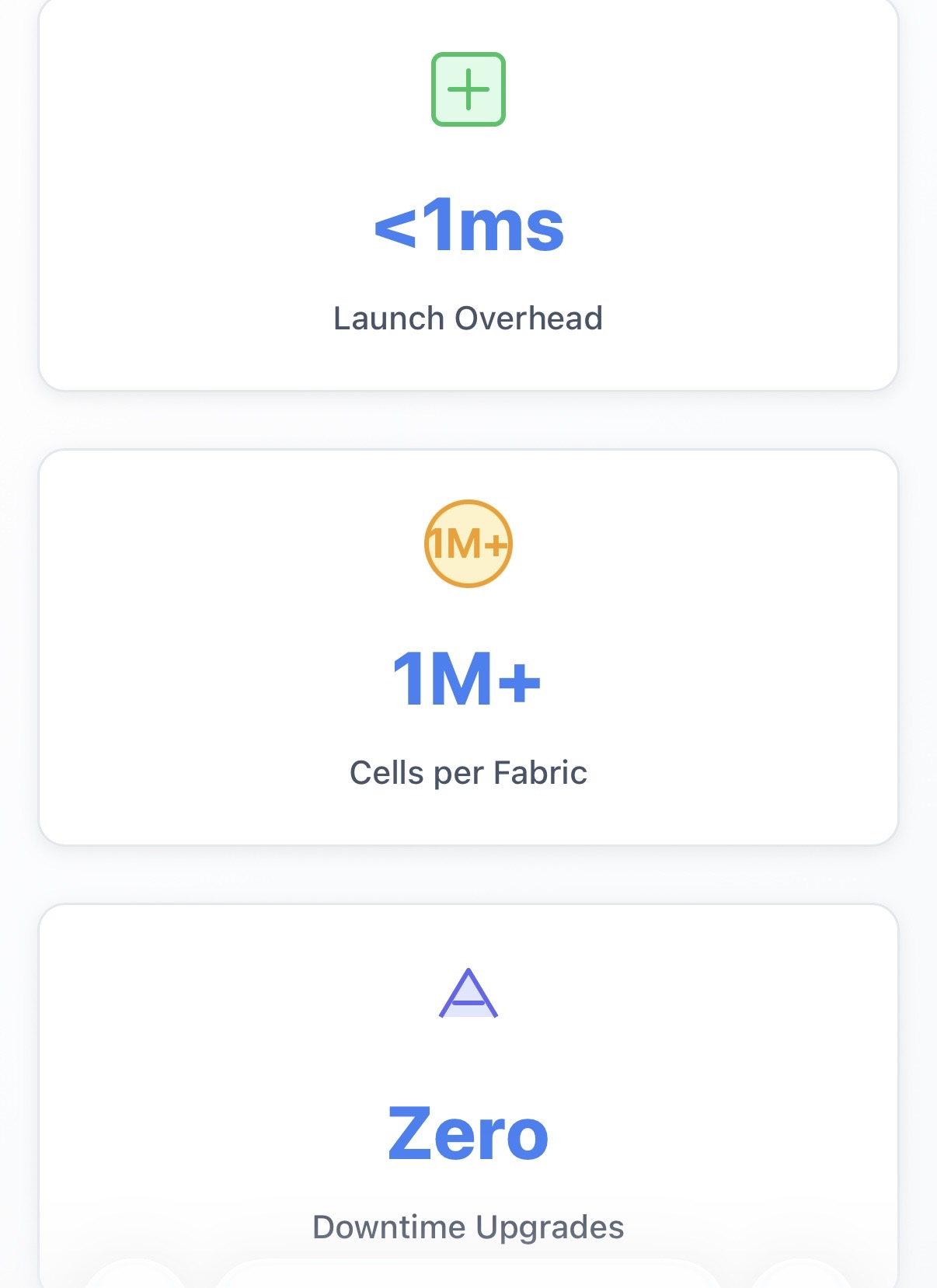

| Scale | 5K–10K Cells/node; 1M+ Cells/fabric — without global maps or polling loops. |

| Launch Overhead | Sub-ms activation — orchestration lag designed out. |

| Security | mTLS-backed semantic identity — “security through meaning,” not just IP ranges. |

Want a deeper, vendor-aware comparison? See Run:ai / NVIDIA vs Celluster →

Compatibility & Evolution

Reflex is orchestration-free, yet universally compatible.

Reflex Compute runs across Kubernetes, Slurm, bare-metal, hypervisors, and GPU clouds without demanding control-plane rewrites.

Reflex doesn’t compete with Kubernetes — it completes it. The Reflex substrate can operate inside Kubernetes, beneath it, or without it as a self-evolving infrastructure model.

As Celluster evolves, more orchestration patterns, policy layers, and meshes can be absorbed into Reflex semantics — shrinking complexity while preserving compatibility.

Hyperscale AI Networking Ready

Use your existing fabric. Let Reflex make it self-coordinating.

“Hyperscale networking for AI” optimizes links, switches, and congestion. Reflex optimizes meaning and coordination on top of those fabrics.

Reflex is not a switch, NIC, or fabric. It runs over InfiniBand, RoCE, NVLink, 400/800G Ethernet, and SmartNICs/DPUs, stripping away the orchestration overhead above them.

Where AI networks move tensors efficiently, Reflex moves intent efficiently — turning a fast network into a reflexive system.

Where Reflex Fits

| Layer | Examples | Reflex’s Role |
|---|---|---|
| GPU / AI Clusters | Lambda, CoreWeave, on-prem GPU fabrics | Turn GPU islands into a reflexive fabric. Reflex Cells encode placement, lineage, and runtime semantics so large fleets run hot without scheduler drag. |
| Kubernetes / Cloud Infra | AWS EKS, GKE, on-prem K8s | Extend, don’t fight, Kubernetes. Reflex sits inside, beneath, or alongside K8s, offloading lifecycle into semantics while preserving APIs. |
| Network Policy Layer | Calico, Cilium-style policies | Lift policies to intent. Map isolation and reachability into Cell semantics; interoperate with existing engines. |
| Sidecars & Mesh | Service mesh, sidecars | Sidecars as Cells. Implement mesh behaviors as Cells instead of per-pod sidecars; reduce bloat and control-plane churn. |
| Data Center Design | Cisco, Arista, Equinix-style DCs | Topology as a reflex graph. Model placements and flows semantically, so they are easier to evolve, reason about, and automate. |
| Edge & Real-Time | Automotive, robotics, telco, trading | Awareness at the edge. Cells respond to live signals instead of centralized polling. |
| Private 5G & Campus Wireless | Private 5G cores, UPF, campus controllers | Slices as semantics. Subscriber, slice, and zone intents as Cells; an eBPF datapath enforces reachability without heavy SDN controllers. |
| SD-WAN & SASE | Branch gateways, cloud edges | Intent-driven overlays. Sites, apps, and users as Cell intents; overlays and paths derived from semantics, not static configs. |
| IoT & Industrial Edge | Factories, sensors, fleets | Per-class meaning. Device classes mapped to Cells with constraints; avoids brittle ACL sprawl. |
| Wi-Fi & Enterprise Zones | Controller-based WLAN, campus zones | Zones as Cells. Policies follow users and services, not SSID/VLAN tricks. |
| Multi-tenant SaaS & Platforms | SaaS control planes, PaaS, B2B platforms | Tenants as first-class Cells. Tenant intent drives isolation, routing, and quotas end-to-end. |
| Future Absorption | Policies, schedulers, glue code | Designed to absorb complexity over time. More orchestration and SDN logic can migrate into reflex semantics instead of living in separate systems. |

Elasticity Without Virtualization

Reflex replaces the scheduler — not elasticity.

Virtualization made sense when hardware was scarce. In AI-native clusters, the real bottleneck is coordination overhead: wasted CPU on control loops and sidecars, complex codepaths to maintain, and control planes that do not scale linearly with fabrics or tenants.

Reflex Cells are elastic within their semantic boundary, which is their intent:

- Lineage Replay — on drift or pressure, a Cell replays launch semantics on the right node.

- Reflexive GC — unused resources decay or rebind automatically based on lineage.

- Intent-Scoped Sharing — Cells of the same intent reuse GPU/NIC pools deterministically.
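To make the three mechanisms above more concrete, here is a minimal Python sketch under stated assumptions. It is not the Reflex SDK API (the SDK launches in 2026); `Cell`, `pool_for_intent`, `replay_launch`, and `reflexive_gc` are hypothetical names, and the shapes of the pool, node, and binding data are invented for illustration. It models intent-scoped sharing as a stable hash from intent to a shared pool, lineage replay as re-placing a Cell onto the least-pressured node that advertises its intent, and reflexive GC as dropping resource bindings whose lineage no longer has a live Cell.

```python
from __future__ import annotations

import hashlib
from dataclasses import dataclass
from typing import Optional


# Hypothetical Cell model: an identity, an intent label, and a lineage id.
@dataclass(frozen=True)
class Cell:
    name: str
    intent: str
    lineage: str


def pool_for_intent(intent: str, pools: list[str]) -> str:
    """Intent-scoped sharing (sketch): every Cell with the same intent maps
    to the same pool. Uses SHA-256 rather than Python's per-process salted
    hash() so the mapping is deterministic across processes and nodes."""
    digest = hashlib.sha256(intent.encode("utf-8")).digest()
    return pools[int.from_bytes(digest[:8], "big") % len(pools)]


def assign(cells: list[Cell], pools: list[str]) -> dict[str, str]:
    """Map each Cell name to its intent's shared pool."""
    return {c.name: pool_for_intent(c.intent, pools) for c in cells}


def replay_launch(cell: Cell, nodes: dict[str, dict],
                  threshold: float = 0.8) -> Optional[str]:
    """Lineage replay (sketch): on drift or pressure, re-place the Cell on
    the least-pressured node that advertises its intent and sits below the
    (assumed) pressure threshold. Returns None if no node qualifies."""
    candidates = [
        (info["pressure"], name)
        for name, info in nodes.items()
        if cell.intent in info["intents"] and info["pressure"] < threshold
    ]
    return min(candidates)[1] if candidates else None


def reflexive_gc(bindings: dict[str, str],
                 live_cells: list[Cell]) -> dict[str, str]:
    """Reflexive GC (sketch): keep only resource->lineage bindings whose
    lineage still has at least one live Cell; the rest are released."""
    live_lineages = {c.lineage for c in live_cells}
    return {res: lin for res, lin in bindings.items() if lin in live_lineages}
```

In this sketch, two Cells sharing the intent `train-llm` always land in the same pool, and a GPU bound to a lineage with no surviving Cell is released on the next GC pass. A real substrate would also have to handle pool capacity and rebinding, which this sketch deliberately omits.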

Where VMs and pods virtualize hardware, Reflex virtualizes coordination — turning overhead into embedded logic and restoring compute to workloads.

Virtualization made hardware fungible. Reflex makes intent fungible.

Reflex SDK — Launching 2026

Everything you need to build on Cells.

The Reflex SDK exposes Cells, manifests, emitters, and integration hooks so teams can adopt Reflex semantics incrementally.

- Components: Reflex Manifest, Reflex Engine, Telemetry Emitters, adapter libraries.

- Documentation: Guides, design notes, and examples shipped with the SDK. See the Celluster OSS README.

- Alpha: Design partners for GPU fabrics & AI clouds (Q1 2026).

- Beta: Public OSS SDK (Q2 2026).

- Onramp: No proprietary certification required — if you understand modern infra and AI, you can reason about Cells.

Early access: sdk@celluster.ai

The Problem — The Hidden Orchestration Tax

Modern GPU and AI clouds silently burn capacity on orchestration: schedulers, daemons, sidecars, mesh, and duplicated telemetry stacks.

- Inference-grade CPU cycles consumed by “management” rather than models.

- Feature rollouts, debugging, and cluster expansion slowed by tangled control planes.

- Each new zone re-implements the same scaffolding for policy, metrics, routing, and safety.

As clusters scale, this tax becomes one of the largest drains on real ROI — not just in compute, but in engineering time and operational risk.

The Reflex Breakthrough — Self-Coordinating Infrastructure

From managed to reflexive.

Celluster Reflex™ encodes execution, design, optimization, and governance into the Cell model itself — moving from external orchestration to embedded semantics.

| Layer | Reflex Innovation | Outcome |
|---|---|---|
| Execution | Intent-driven Cells carry identity, ACLs, telemetry & lineage. | Less control-plane code; more usable capacity. |
| Design | Reflex Planner builds zones from semantic descriptors. | Faster cluster design with fewer moving parts. |
| Optimization | Embedded reflexes react to live signals instead of cron-like loops. | Continuous tuning without centralized schedulers. |
| Security & Governance | Membrane-level semantics + verifiable lineage per Cell. | Built-in auditability and policy clarity. |

Result: higher utilization, faster evolution, and an architecture that scales in meaning, not just in nodes.

Core IP filed Oct 2025 — Intent-Based Reflex Cell Architecture for AI-Native Infrastructure.

Pilot Program & Partnership Paths

For teams ready to lead the next wave of AI infrastructure.

Celluster is opening a limited design-partner track to validate Reflex in real GPU and hybrid environments.

- Target outcomes: efficiency gains, leaner SRE load, faster cluster instantiation.

- Collaborative measurement: joint ROI and architecture reports; shared learnings for both teams.

- Strategic upside: co-design rights, roadmap influence, and category narrative advantages.

Paths include:

- Design Partner: Run Reflex SDK and Cells on a focused slice of your cluster.

- Strategic Integration: Embed Planner + Runtime into your AI cloud or platform.

- Deeper Alignment: Explore licensing or corporate development aligned with Reflex-native roadmaps.

Celluster Reflex™ can amplify your cloud’s story — or someone else’s.

Founder Note

Built independently from concept to working prototypes, Celluster Reflex is engineered for practitioners who feel the orchestration tax every day.

If you are designing GPU clouds, AI fabrics, or sovereign infrastructure and want to define what comes after “managed Kubernetes,” Reflex is your substrate.