Celluster™ Intent Mesh

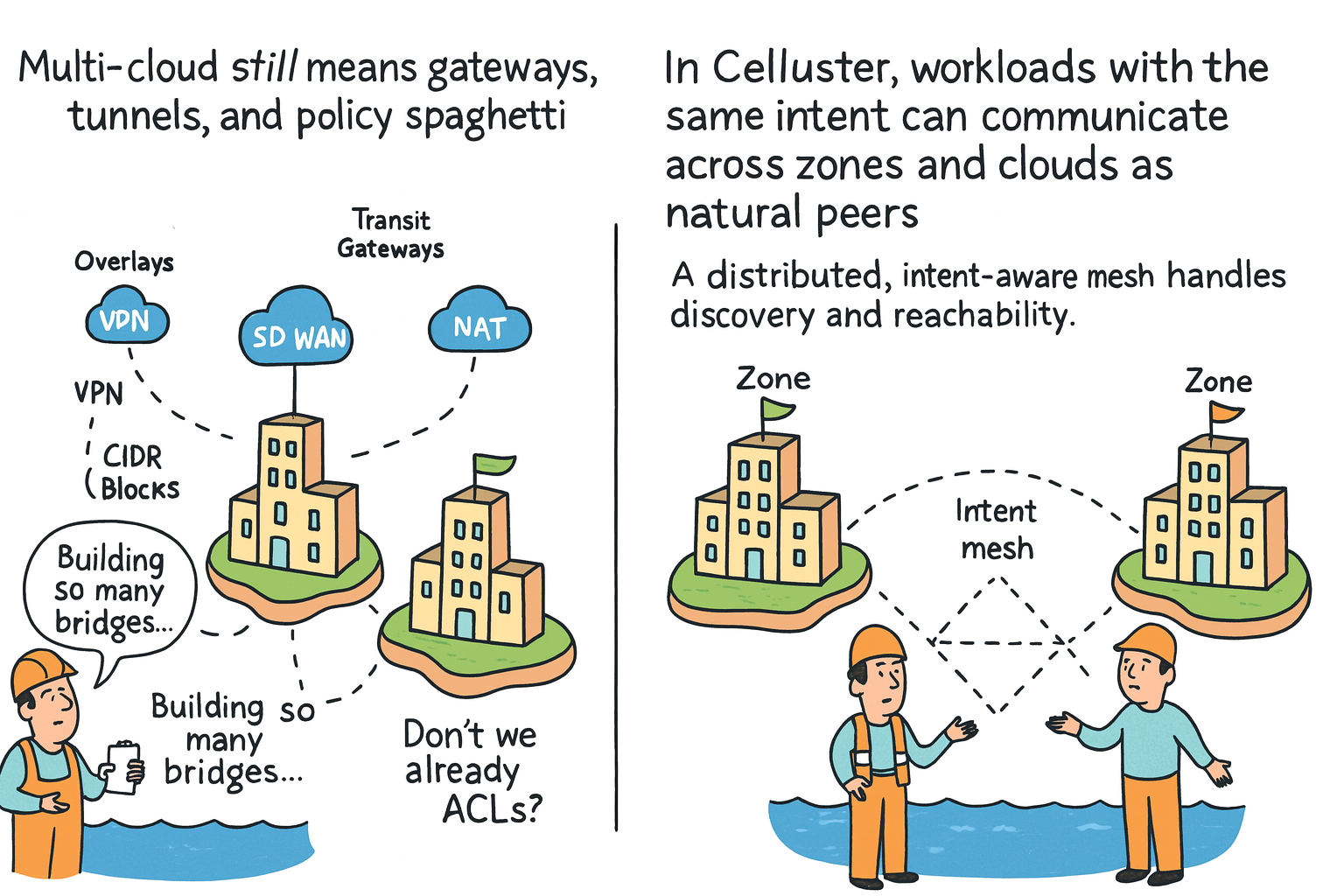

Inter-cloud & inter-zone, without policy spaghetti.

Multi-cloud networking still looks like a construction project: VPNs, SD-WAN, transit gateways, overlays, NAT, and thousands of rules just to let two apps talk. Every new zone, VPC, or region means building more bridges.

Celluster flips this: workloads with the same intent discover each other as natural peers across zones and clouds, connected by an intent-aware mesh instead of layered gateways.

Multi-Cloud Today

Gateways, tunnels, and policy spaghetti.

To connect workloads across zones, regions, or clouds today, teams stack:

- Transit gateways and SD-WAN meshes.

- VPN tunnels, overlays, and NAT hops.

- CIDR blocks, VRFs, and route tables per environment.

- Duplicated ACLs across firewalls, meshes, and cloud security groups.

Every new project, region, or tenant adds more of these layers. Connectivity works, but the resulting graph becomes fragile and hard to reason about.

Celluster Intent Mesh

Peers, not pipes.

Celluster starts from a different question. Instead of wiring networks first and then asking what may cross them, it asks:

“Which workloads share an intent, and where are they allowed to talk?”

The intent mesh is a distributed, intent-aware layer that:

- Lets workloads with the same intent see each other as peers across zones and clouds.

- Derives reachability from intent + tenant + zone, not from hand-crafted gateway rules.

- Keeps connectivity symmetric: if it’s allowed one way, it’s allowed the other, by design.
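To make the three properties above concrete, here is a minimal Python sketch of reachability derived from intent, tenant, and zone. It is an illustration only, not Celluster's API: the `Workload` type, the `can_talk` function, the `allowed_zones` mapping, and the zone names are all hypothetical. The point it shows is that when the check treats both workloads identically, symmetry follows from the rule itself rather than from paired firewall entries.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workload:
    # Hypothetical model: a workload is described by what it is for
    # (intent), who owns it (tenant), and where it runs (zone).
    name: str
    intent: str
    tenant: str
    zone: str


def can_talk(a: Workload, b: Workload, allowed_zones: dict) -> bool:
    """Return True if a and b may connect.

    allowed_zones maps an intent to the set of zones in which that
    intent is permitted to communicate (an assumed representation).
    """
    # Peers must share both intent and tenant.
    if a.intent != b.intent or a.tenant != b.tenant:
        return False
    zones = allowed_zones.get(a.intent, frozenset())
    # Both endpoints must sit in a permitted zone. The test is the same
    # for a and b, so can_talk(a, b) always equals can_talk(b, a).
    return a.zone in zones and b.zone in zones


allowed = {"payments": {"aws-us-east-1", "gcp-europe-west1"}}
api = Workload("api", "payments", "acme", "aws-us-east-1")
db = Workload("db", "payments", "acme", "gcp-europe-west1")
other = Workload("api", "payments", "globex", "aws-us-east-1")
```

In this sketch `api` and `db` are peers even though they run in different clouds, while `other` is excluded because it belongs to a different tenant. No gateway or per-direction rule appears anywhere in the decision.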

Zones and clouds become coordinates inside the mesh, not reasons to build another gateway.

Gateways vs Intent Mesh

What changes when intent becomes the routing surface.

| Aspect | Traditional Multi-Cloud | Celluster Intent Mesh |
|---|---|---|
| Unit of design | VPCs, subnets, gateways, tunnels. | Workloads and intents: “who should talk to whom,” independent of cloud. |
| Reachability | Static routes, security groups, firewall rules. | Derived from intent, tenant, and zone; expressed once, applied everywhere. |
| Change management | Tickets to update gateways and ACLs per environment. | Update intent; mesh adapts across zones and clouds in a coordinated way. |
| Failure & drift | Hidden asymmetries, missing rules, “works in one region, broken in another.” | Single source of truth for who may connect; less room for drift. |
| Onboarding a zone | New CIDR plan, new tunnels, new policy copies. | Attach the zone; intents already know who should be reachable there. |

Inter-Zone Migrations in Milliseconds

Connectivity that survives the move.

Because workloads are addressed by intent and zone, not by one static placement, moving them between zones or clouds is a first-class operation:

- Bring up a new copy of the workload in a different zone or cloud.

- Attach it to the same intent and tenant.

- Let the mesh shift traffic and clean up the old location.
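The three steps above can be sketched as operations on a small in-memory registry. This is a toy model under stated assumptions, not how Celluster implements migration: `IntentRegistry`, `attach`, `shift_traffic`, `detach`, `resolve`, and `migrate` are hypothetical names, and traffic share is modeled as a simple per-zone weight. It says nothing about timing; it only shows that callers keep resolving the same (tenant, intent) pair before and after the move.

```python
class IntentRegistry:
    """Toy registry: (tenant, intent) -> {zone: traffic weight}."""

    def __init__(self):
        self._placements = {}

    def attach(self, tenant, intent, zone, weight=0.0):
        # Register a copy of the workload in a zone under the same
        # intent and tenant. New copies start with no traffic.
        self._placements.setdefault((tenant, intent), {})[zone] = weight

    def shift_traffic(self, tenant, intent, src, dst):
        # Move all of the source zone's traffic share to the destination.
        zones = self._placements[(tenant, intent)]
        zones[dst] += zones[src]
        zones[src] = 0.0

    def detach(self, tenant, intent, zone):
        # Clean up a placement, refusing if it still carries traffic.
        zones = self._placements[(tenant, intent)]
        if zones[zone] != 0.0:
            raise RuntimeError(f"{zone} still carries traffic")
        del zones[zone]

    def resolve(self, tenant, intent):
        # Callers look up peers by tenant and intent, never by address.
        return dict(self._placements.get((tenant, intent), {}))


def migrate(registry, tenant, intent, src, dst):
    registry.attach(tenant, intent, dst)              # new copy, same intent
    registry.shift_traffic(tenant, intent, src, dst)  # mesh shifts traffic
    registry.detach(tenant, intent, src)              # old location removed


registry = IntentRegistry()
registry.attach("acme", "payments", "aws-us-east-1", weight=1.0)
migrate(registry, "acme", "payments", "aws-us-east-1", "gcp-europe-west1")
```

After `migrate` runs, `registry.resolve("acme", "payments")` lists only the new zone. Nothing that depends on the `payments` intent had to learn a new address, route, or tunnel.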

The network doesn’t need to be re-designed every time you rebalance capacity or costs.

Migrations become part of the reflex story, not a separate connectivity project.